| Sampling strategy | Description | Likelihood of selection bias |

|---|---|---|

| Simple Random Sampling | Every participant in the population has the same chance of being selected for the study. However, it is usually difficult to achieve this approach in practice since it requires a complete list of the population, also known as the sampling frame, which is difficult to obtain. Also, in very small samples it can happen that small subgroups in a population might get missed as their chance of being included is also small. | Low |

| Stratified Random Sampling | The population is divided into subgroups, so-called ‘strata’ from which participants are selected using random sampling. This technique ensures that all subgroups of a population can be included, regardless of their size. In those scenarios, this technique can be superior to simple random sampling. | Low |

| Cluster Sampling | The population is divided into clusters (e.g., countries, schools, departments, etc.), and a random sample of clusters is selected. After that, either all individuals in the chosen clusters are asked to participate (i.e., one-stage cluster sampling), or a random sample is drawn from within each of the sampled clusters (i.e., two-stage cluster sampling). This technique is often used in large and geographically dispersed populations to make the research more feasible. | Low to Moderate |

| Quota Sampling | The population is divided into subgroups (quota classes) and convenience sampling is used to fill quotas for each subgroup based on specific characteristics. A main difference of this technique to stratified sampling is that non-probability techniques are used to choose members for the subgroups. | Moderate |

| Purposive / Judgement Sampling | Participants are selected based on specific criteria that the researcher believes are important for identifying individuals who are most relevant to the study. | Moderate to High |

| Snowball Sampling | Future participants are chosen based on recommendations from current participants. For example, after conducting an interview, the interviewer might ask whether they can recommend other possible participants. | High |

| Convenience Sampling | Participants are chosen because they are easy for the researcher to access and are willing to take part in the study. | High |

| Self-selected Sampling | Individuals choose by themselves whether they want to take part in a study or not. Many online questionnaires or review platforms use self-selected samples. Be wary of those Amazon reviews. | High |

9 Sources of bias: Sampling, outliers, normality and other ‘conundrums’

’Bias’ in your analysis is hardly ever a good thing unless you are a qualitative researcher. Whether you consider it positive or negative, we have to be aware of issues preventing us from performing a specific type of analysis. All of the statistical computations discussed in the following chapters can easily be affected by different sources of bias and, therefore, need to be discussed in advance. The lack of certain biases can be an assumption of particular statistical tests. Thus, violating these assumptions would imply that the analytical technique we use will produce wrong results, i.e. biased results. Field (2013) summarises three main assumptions we have to consider:

Linearity and additivity,

Independence,

Normality, and

Homogeneity of variance, i.e. homoscedasticity.

Most parametric tests require that all assumptions are met. If this is not the case, we have to use alternative approaches, i.e. non-parametric tests. The distinction is essential since parametric and non-parametric tests are based on different computational methods, leading to different outcomes.

Lastly, Field (2013) also mentions outliers as an important source of bias. Irrespective of whether your data fulfils the assumptions for parametric tests, outliers tend to be a significant problem. They usually lead to wrong results and, consequently, to misinterpretations of our findings. We will also cover how we can identify and handle such outliers in our data at the end of this chapter.

9.1 The origins of biases: A short introduction to sampling theory

While we might have a solid understanding that biases are an issue for quantitative researchers, we might wonder where they emerge from or whether they appear randomly to make our lives miserable. While there are many reasons why we have a biased dataset, two are particularly noteworthy. On the one hand, what might seem like a bias to us could be the nature of the population. Not every population follows a normal distribution (see Section 8.4.2 and Section 9.4) and not every relationship between variables is linear (see Section 9.2). So, no matter how carefully we choose our participants, we might still violate some of the assumptions for specific techniques.

On the other hand, and much more of a concern, are sampling strategies we pick to collect our data. This section will elaborate more on the issues of choosing a viable sampling strategy and why it matters. In many ways, it is the building block of any analysis. Only good and reliable data can produce good and reliable results.

Let’s take a step back and consider the purpose of our analysis. We primarily conduct analysis to identify patterns in our world that can hopefully be replicated across different contexts. We often conduct research based on a smaller group of people (i.e. a sample) to generalise to a much larger group of people (i.e. the population). Under most circumstances, it would be impossible for most studies to collect data from the entire population. Thus, we try to infer from our small sample how things are in the entire population. For this to work reliably, we need to ensure that our sample is sufficiently large to reflect the entire population. By ‘reflect’, I mean that the data is large enough to produce results that closely approximate what we would observe if data were collected from the entire population. Researchers often refer to this as the ‘representativeness’ of the sample. The lack of ‘representativeness’ is often referred to as ‘selection bias’ (see also (Lohr 2021)).

In order to achieve ‘representativeness,’ we need to ensure that our sample matches the characteristics of the population closely enough. A major factor that affects this is sampling strategy. The sampling strategy determines the method we use to select individuals from a population. Unfortunately, not all strategies are considered good choices and introduce various degrees of selection bias. Bear in mind, in qualitative research, selection bias is not a real issue, and strategies that help identify participants that could valuable contribute to the study would always be given preference over randomly selecting people for the sake of representativeness. Table 9.1 briefly summarises some of the most common sampling strategies deployed in Social Sciences and their likelihood of introducing selection bias.

While all this might sound terribly theoretical, let me provide you with an example. Imagine we are interested in understanding how much time students spend on their weekly readings in our department. Also, assume that our department consists of 1000 students. We now wish to draw a sample of students for our analysis.

The dataset reading_time from the r4np package contains all the information we are after. Since the dataset contains information about the entire population we can identify important characteristics of our population which we later can compare against our sample. We can use the trusty count() function to obtain information about distributions in our population, for example for gender.

# Gender distribtuion

reading_time |>

count(gender) |>

mutate(perc = n/sum(n))# A tibble: 3 × 3

gender n perc

<chr> <int> <dbl>

1 female 450 0.45

2 male 450 0.45

3 non-binary 100 0.1 We can see that in our department 45% of students identify as female, 45% as male and 10% as non-binary. Consequently, when we draw a sample from this population we would ideally wish to end up with a similar distribution. The percentages mentioned here are also indicative of how likely someone will be selected based on gender, i.e. there is only a 10% chance that we pick a student who identifies as non-binary, while there is an even chance of 45% to pick a female or male student.

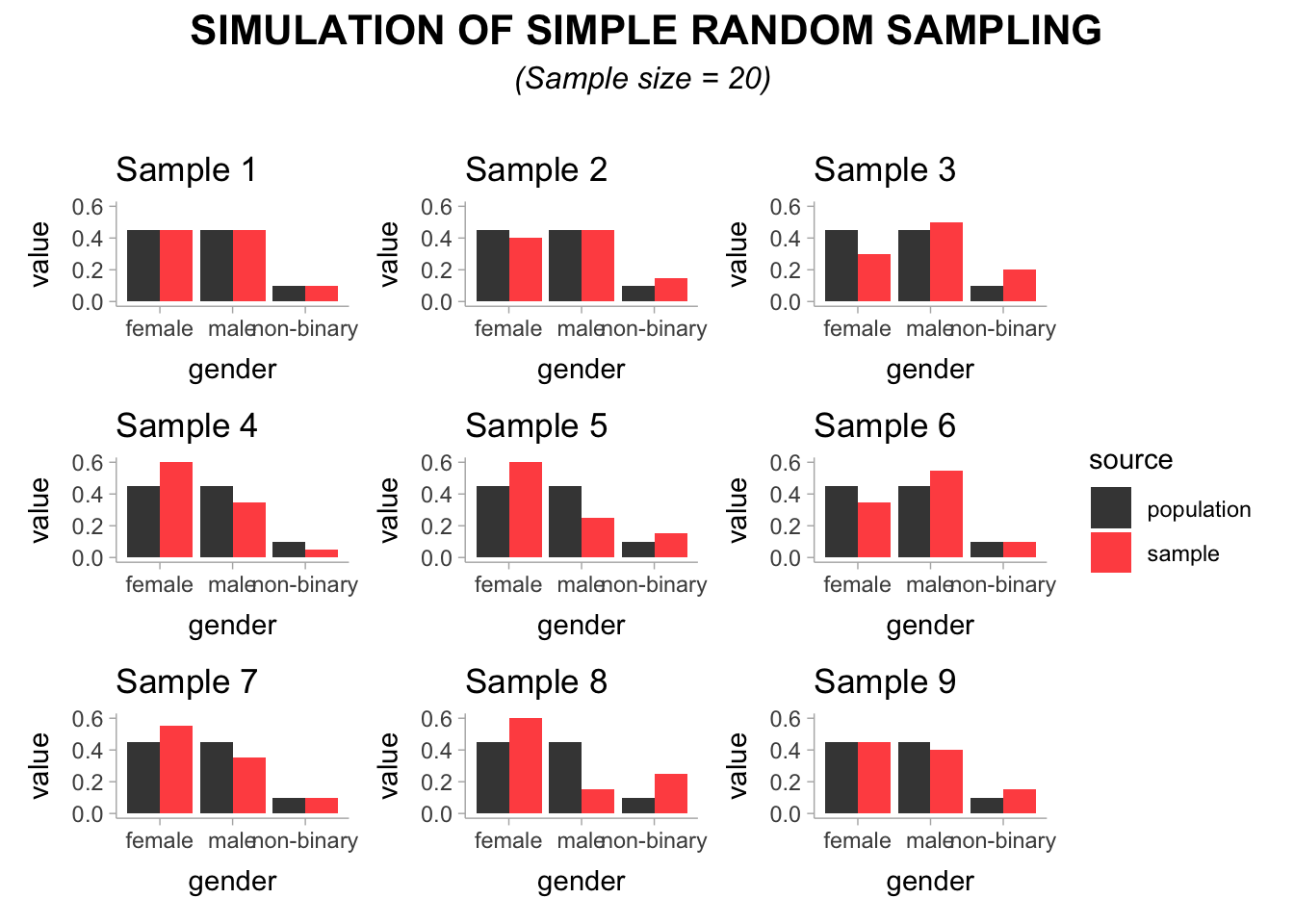

Now that we know how our sample should look like with regards to gender we can start selecting people. Let’s begin by drawing nine samples of 20 students.

Looking at the visualisation, we can see the distribution of gender in our sample in red compared to the one in our population coloured in grey. You might be surprised to see that not all samples seem equally good in being representative even though we used simple random sampling. Sample 1, Sample 2, and Sample 9 seem to match the gender distribution of our population fairly well. However, Sample 4, Sample 5 and Sample 8 are rather poor representations of our population. Thus, only 3 out of 9 samples would provide us with a dataset that is somewhat representative.

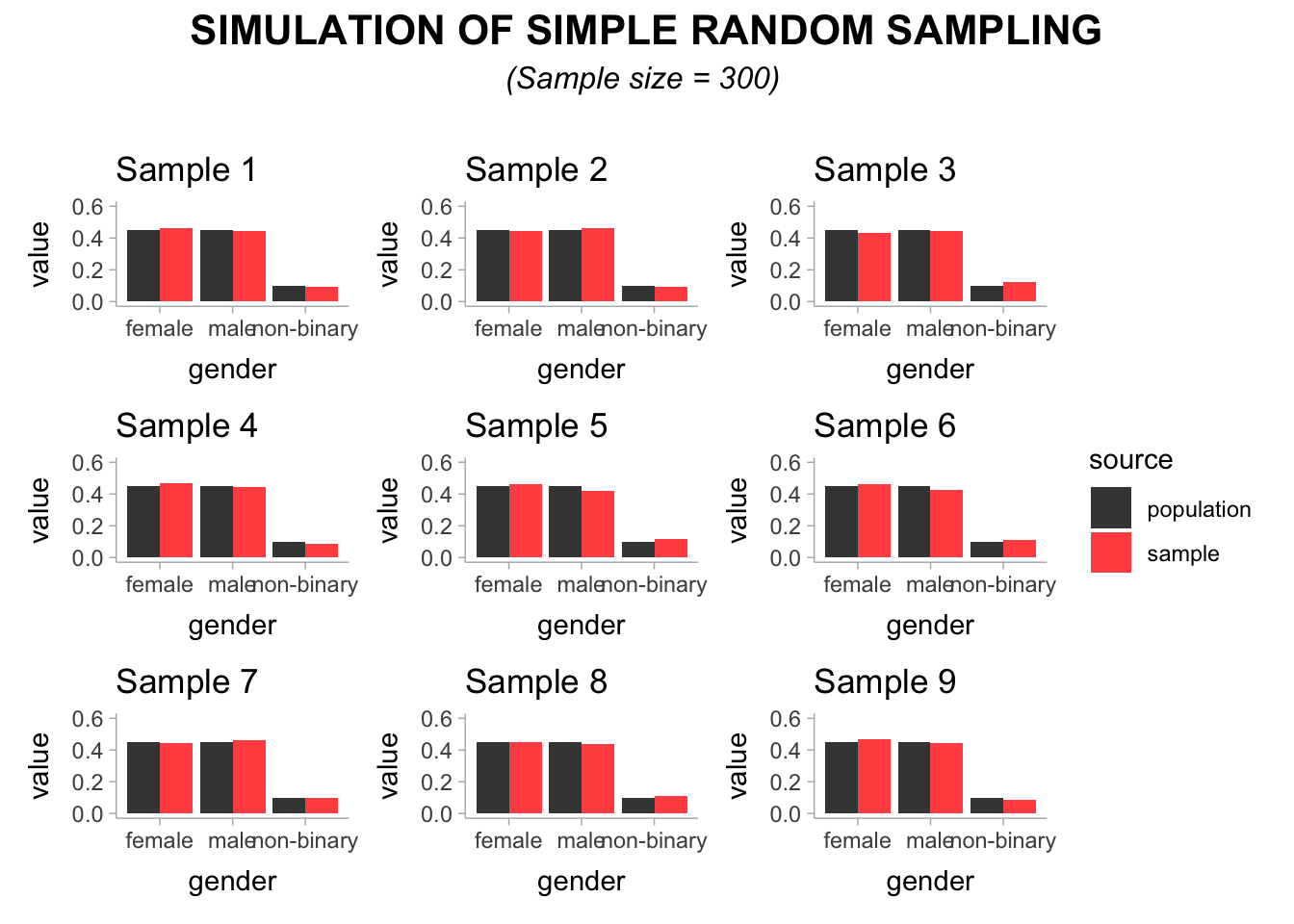

An attentive reader might wonder: Is a sample of 20 students not too small? And the answer would be a resounding ‘yes’. Even with simple random sampling, we would have to achieve a large enough sample to ensure no matter how often we draw a sample, we end up with a dataset that is representative enough of our population. Let’s draw nine more samples, but this time we opt to select 300 students for each sample.

This time, all of our 9 samples seem to represent the population very well with regards to the gender distribution. In short: your sampling strategy and sample size matter significantly to achieve a dataset which can provide insights into the chosen population. This is true irrespective of whether correct analytical techniques were chosen to analyse the data afterwards, which of course would still produce wrong results.

Lastly, let me show you how results could differ when samples are not comparable by considering the mean and median reading time (min_reading) by students in our department. I made a couple more modifications to enable us to read the final results better. So don’t feel irritated by the code I am showing you below.

# Reading time based on entire population (n = 1000)

population <- reading_time |>

summarise(mean = mean(min_reading),

median = median(min_reading)) |>

mutate(sample_type = "population",

.before = everything())

# Reading time based on representative sample (n = 300)

set.seed(123456)

rep_sample_300 <-

reading_time |>

slice_sample(n = 300) |>

summarise(mean = mean(min_reading),

median = median(min_reading)) |>

mutate(sample_type = "representative sample (n = 300)",

.before = everything())

# Reading time based on representative sample (n = 20)

set.seed(123456)

rep_sample_20 <-

reading_time |>

slice_sample(n = 20) |>

summarise(mean = mean(min_reading),

median = median(min_reading)) |>

mutate(sample_type = "representative sample (n = 20)",

.before = everything())

# Reading time based on non-representative sample (n = 20)

set.seed(123585)

non_rep_sample <-

reading_time |>

slice_sample(n = 20) |>

summarise(mean = mean(min_reading),

median = median(min_reading)) |>

mutate(sample_type = "non-representative sample (n = 20)",

.before = everything())

comparison <- rbind(population, rep_sample_300,

rep_sample_20, non_rep_sample)

comparison# A tibble: 4 × 3

sample_type mean median

<chr> <dbl> <dbl>

1 population 362. 359

2 representative sample (n = 300) 364. 364.

3 representative sample (n = 20) 354. 376

4 non-representative sample (n = 20) 335. 328.In light of everything we covered in this chapter, it is not surprising to find that the representative samples are much closer to the true mean and median of the population than the non-representative one. In addition, the bigger representative sample also performed much better in capturing the central tendency of min_reading in our population than the smaller one.

In conclusion, even with the right analytical tools, our results depend heavily on how we selected a sample from the population to ensure it represents the group we are interested in. This highlights the importance of clearly defining your population as a crucial first step in the process. Consequently, choosing a sampling strategy that leads to less representative datasets implies that your findings are likely not applicable to people outside your sample. While this is not necessarily a problem, it is a limitation that you should be transparent about.

9.2 Linearity and additivity

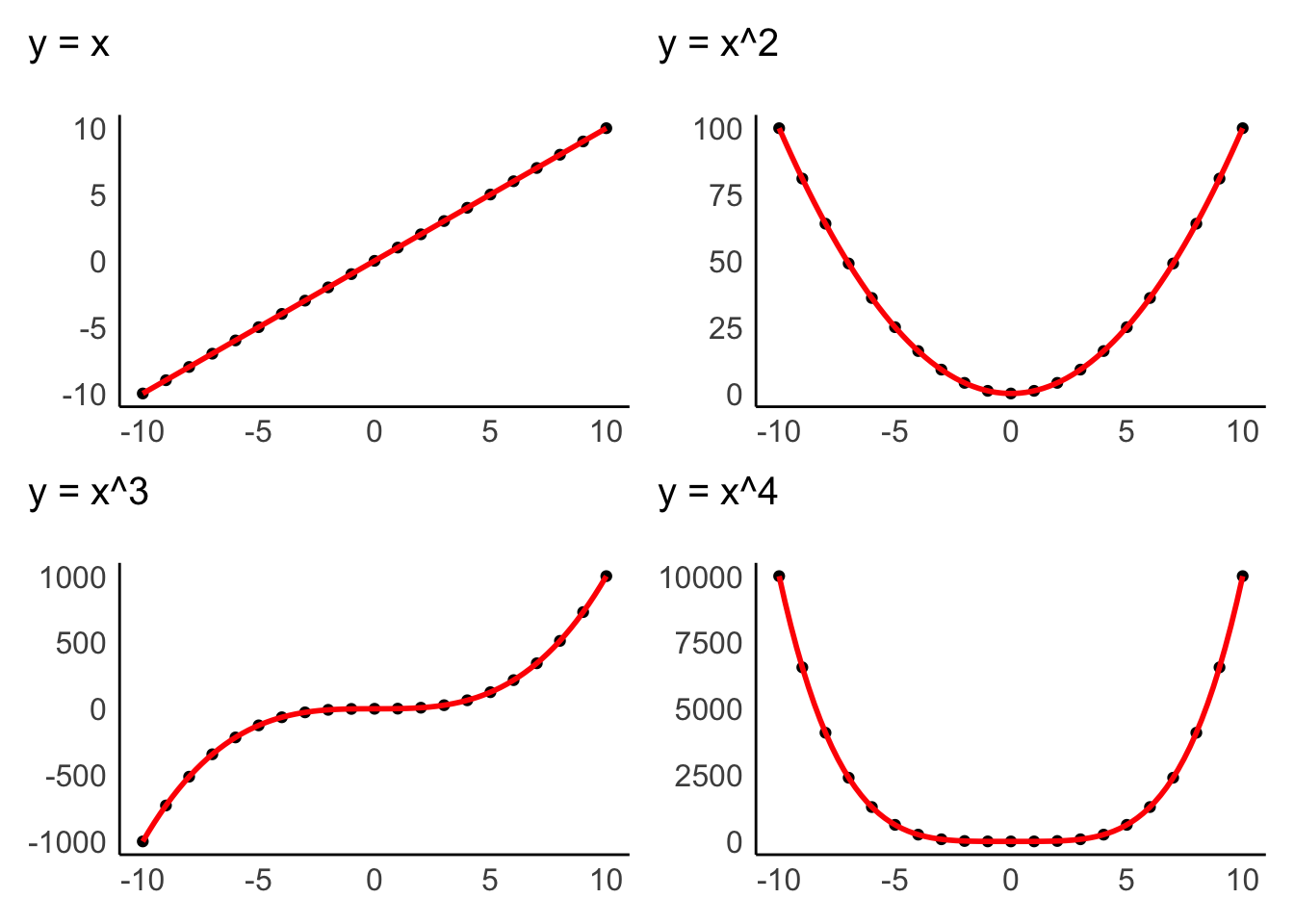

The assumption of linearity postulates that the relationship of variables represents a straight line and not a curve or any other shape. Figure 9.1 depicts examples of how two variables could be related to each other. Only the first one demonstrates a linear relationship, and all other plots would represent a violation of linearity.

Data visualisations are particularly useful to identify whether variables are related to each other in a linear fashion. The examples above were all created with geom_point(), which creates a dot plot that maps the relationship between two variables. In Chapter 10, we will look more closely at the relationship of two variables in the form of correlations, which measure the strength of a linear relationship between variables.

Additivity is given when the effects of all independent variables can be added up to obtain the total effect they have on a dependent variable. In other words, the effect that multiple variables have on another variable can be added up to reflect their total effect.

If we assume that we have a dependent variable \(Y\) which is affected by other (independent) variables \(X\), we could summarise additivity and linearity as a formula:

\(Y = \beta_{1} * X_1 + \beta_{2} * X_{2} + ... + \beta_{n} * X_{n}\)

The \(\beta\) (beta) stands for the degree of change in a variable \(X\) causes in \(Y\). Or, in simpler terms, \(\beta\) reflects the impact an independent variable has on the dependent variable. We will return to this equation and terminology in Chapter 13, where we create a linear model via regression.

9.3 Independence

The notion of independence is an important one. It assumes that each observation in our dataset is independent of other observations. For example, imagine my wife Fiona and I take part in a study that asks us to rank movies by how much we like them. Each of us has to complete the ranking by ourselves, but since we both sit in the same living room, we start chatting about these movies. By doing so, we influence each other’s rankings and might even agree on the same ranking. Thus, our scores are not independent from each other. On the other hand, suppose we were both sitting in our respective offices and rank these movies. In that case, the rankings could potentially still be very similar, but this time the observations are independent of each other.

There is no statistical measurement or plot that can tell us whether observations are independent or not. Ensuring independence is a matter of data collection and not data analysis. Thus, it depends on how you, for example, designed your experiment, or when and where you ask participants to complete a survey, etc. Still, this criterion should not be downplayed as being a ‘soft’ one, just because there is no statistical test, but should remain on your radar throughout the planning and data collection stage of your research.

9.4 Normality

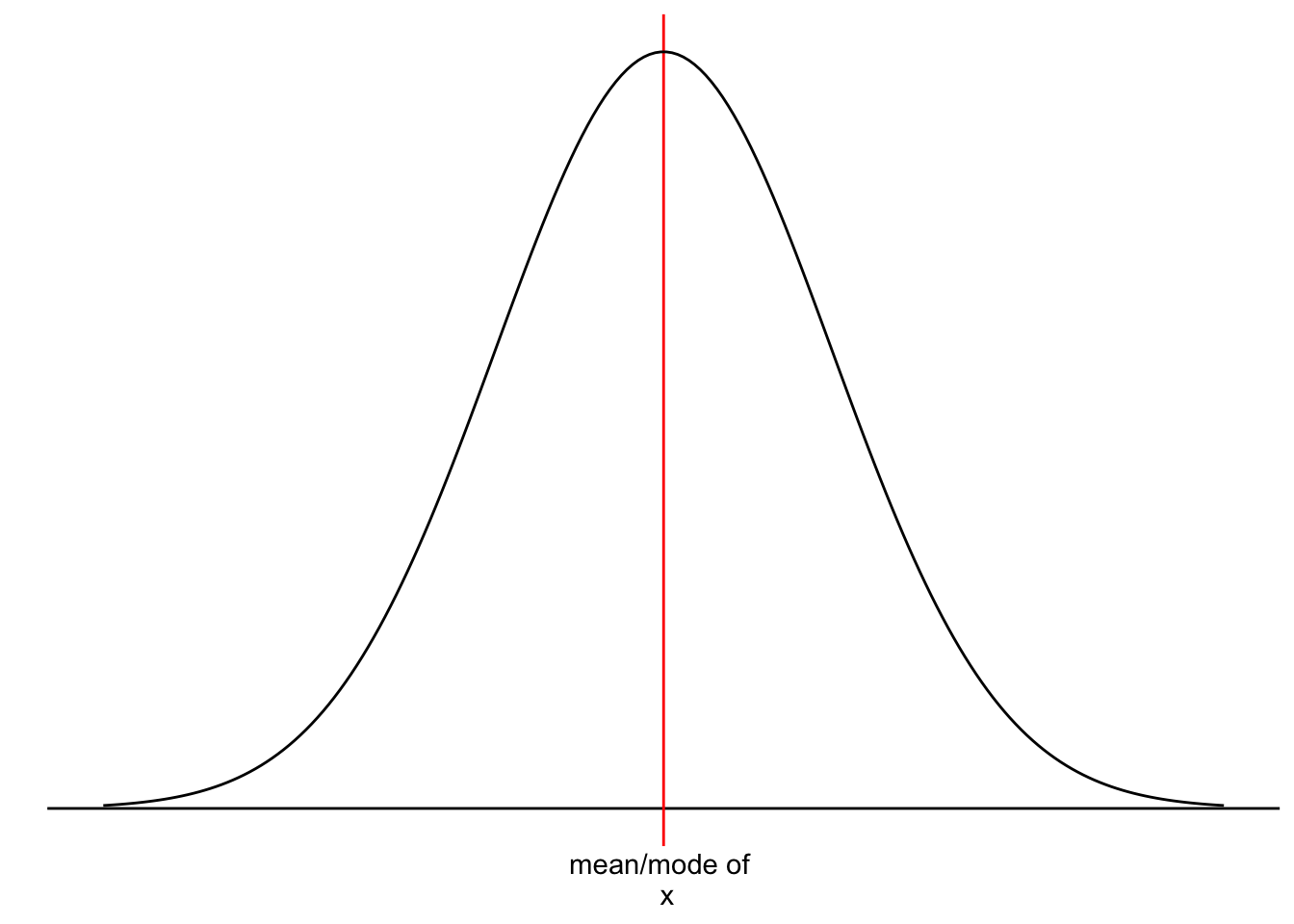

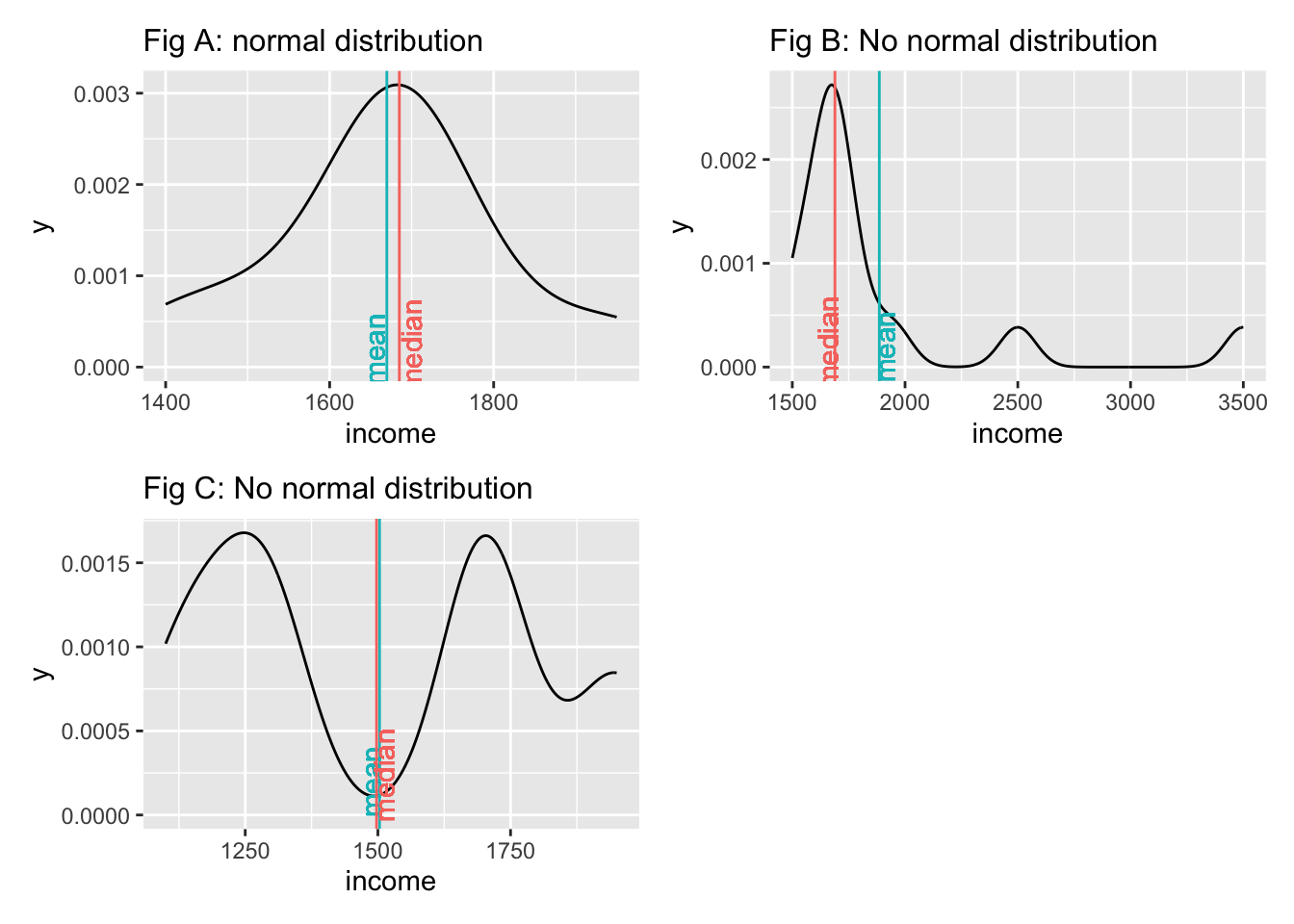

We touched upon the notion of ‘normality’ and ‘normal distributions’ before in Section 8.4 because it refers to the spread of our data. Figure 9.2 should look familiar by now.

However, we have yet to understand why it is essential that our data follows a normal distribution. Most parametric tests are based on means. For example, if we want to compare two groups with each other, we would compute the mean for each of them and then see whether their means differ from each other in a significant way (see Chapter 11. Of course, if our data is not very normally distributed, means are a poor reference point for most of the observations in this group. We already know that outliers heavily affect means, but even without outliers, the mean could be a poor choice. Let me provide some visual examples.

We notice that neither the median nor the mean by themselves is a reliable indicator for normality. Figure A and Figure C both show that the median and mean are almost identical, but only Figure A follows a normal distribution. The median and mean in Figure C are not reflective of the average observation in this dataset. Most scores lie below and above the mean/median. Therefore, when we analyse the normality of our data, we usually are not interested in the normality of a single variable but the normality of the sampling distribution. However, we cannot directly assess the sampling distribution in most cases. As such, we often revert to testing the normality of our data. There are also instances where we would not expect a normal distribution to exist. Consider the following plots:

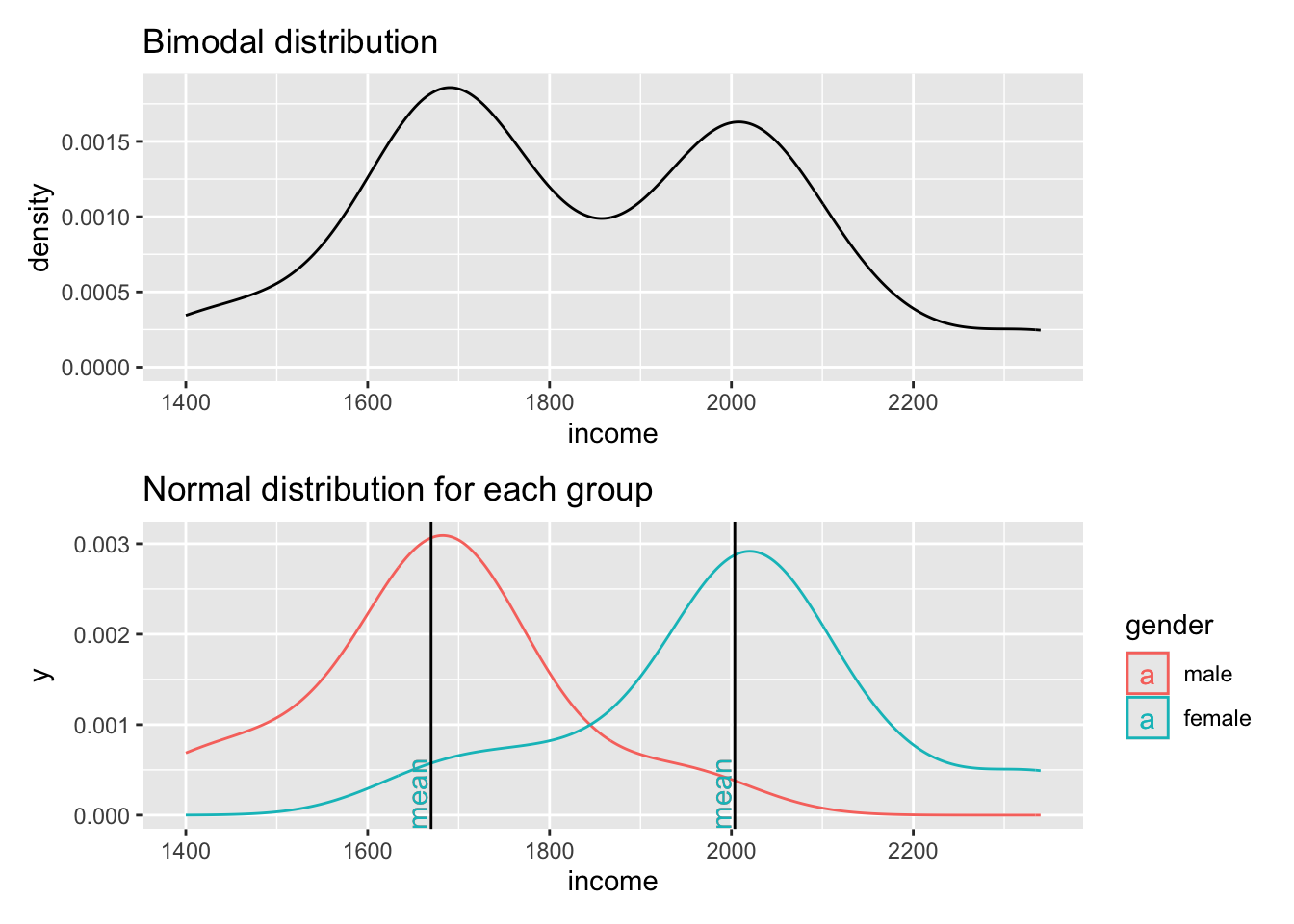

The first plot clearly shows that data is not normally distributed. If anything, it looks more like the back of a camel. The technical term for this distribution is called ‘bimodal distribution’. In cases where our data has even more peaks we consider it as a ‘multimodal distribution’. If we identify distributions that look remotely like this, we can assume that there must be another variable that helps explain why there are two peaks in our distribution. The plot below reveals that gender appears to play an important role. Drawing the distribution for each subset of our data reveals that income is now normally distributed for each group and has two different means. Thus, solely focusing on normal distributions for a single variable would not be meaningful if you do not consider the impact of other variables. If we think that gender plays an important role to understand income levels, we would have to expect that the distribution is not normal when looking at our data in its entirety.

Determining whether data is normally distributed can be challenging when only inspecting plots, e.g. histograms, density plots or QQ plots. Luckily, there is also a statistical method to test whether our data is normally distributed: The Shapiro-Wilk test. This test compares our distribution with a normal distribution (like in our plot) and tells us whether our distribution is significantly different from it. Thus, if the test is not significant, the distribution of our data is not significantly different from a normal distribution, or in simple terms: It is normally distributed. We can run the test in R as follows for the dataset that underpins Fig A above:

shapiro.test(data$income)

Shapiro-Wilk normality test

data: data$income

W = 0.94586, p-value = 0.5916This result confirms that the data is normally distributed, because it is not significantly different (\(p > 0.05\)). The chapter on correlations looks at significance and its meaning more thoroughly (see Section 10.3).

In terms of computing normality, it can become very troublesome, very quickly. When we compare different groups of people (like in Section 11.3.1) we have to check normality for each group and each variable of interest separately, which means we first need to create subsets of our data for each group and then perform shapiro.test() separately for each group. This might be still manageable with two or three groups, but can become very time-consuming if there are more groups in a larger dataset. Luckily, I included some helpful functions in r4np that can help make this a lot easier:

ntest(): This function allows to compute multipleshapiro.test()s for multiple variables.ntest_by(): This function allows to compute multipleshapiro.test()s for multiple variables based on a group variable.

Let me show you how these two functions are extremely handy when testing normality in bulk.

# Perform 'shapiro.test()' for multiple variables at once

ic_training |> ntest(cols = c(communication,

teamwork,

leadership))# A tibble: 3 × 4

row_name statistic p.value method

<chr> <dbl> <dbl> <chr>

1 communication 0.970 0.0255 Shapiro-Wilk normality test

2 teamwork 0.963 0.00890 Shapiro-Wilk normality test

3 leadership 0.962 0.00702 Shapiro-Wilk normality testHere is an example where we compute the normality for all three variables again, but this time we consider two different subsets of our dataset.

# Perform 'shapiro.test()' for multiple variables and groups

ic_training |> ntest_by(cols = c(communication,

teamwork,

leadership),

group = test

)# A tibble: 6 × 4

test variable statistic p.value

<fct> <chr> <dbl> <dbl>

1 pre_training communication 0.978 0.517

2 pre_training teamwork 0.950 0.0398

3 pre_training leadership 0.957 0.0747

4 post_training communication 0.924 0.00415

5 post_training teamwork 0.944 0.0240

6 post_training leadership 0.937 0.0124 I use the ntest_by() function regularly, because it saves so much time and does not require me to create subsets of my data. It is probably the most useful analytical function I have ever written (to date).

In conclusion, the normality of data is an essential pre-test for any of our studies. If we violate the assumption of normality, we will have to fall back to non-parametric tests. However, this rule has two exceptions: The Central Limit Theorem and using ‘robust’ measures of parametric tests. The Central Limit Theorem postulates that as our sample becomes larger, our sampling distribution becomes more and more normal around the mean of the underlying population. For example, Field (2013) (p.54) refers to a sample of 30 as a common rule of thumb. As such, it is possible to assume normality for larger datasets even though our visualisation and the Shapiro-Wilk test tell us otherwise. The second exception is that many parametric tests offer a ‘robust’ alternative, often via bootstrapping. Bootstrapping refers to the process of subsampling your data, for example, 2000 times, and look at the average outcome.

Admittedly, this raises the question: Can I ignore normality and move on with my analysis if my sample is large enough or I use bootstrapping? In short: Yes. This fact probably also explains why we hardly ever find results from normality tests in journal publications since most Social Science research involves more than 30 participants. However, if you find yourself in a situation where the sample size is smaller, all of the above needs to be checked and thoroughly considered. However, the sample size also has implications with regards to the power of your test/findings (see Chapter 12). Ultimately, the answer to the above questions remains, unsatisfyingly: ‘It depends’.

9.5 Homogeneity of variance (homoscedasticity)

The term ‘variance’ should sound familiar, because we mentioned it in Section 8.4.6 where we looked at the standard deviation derived from the variance.

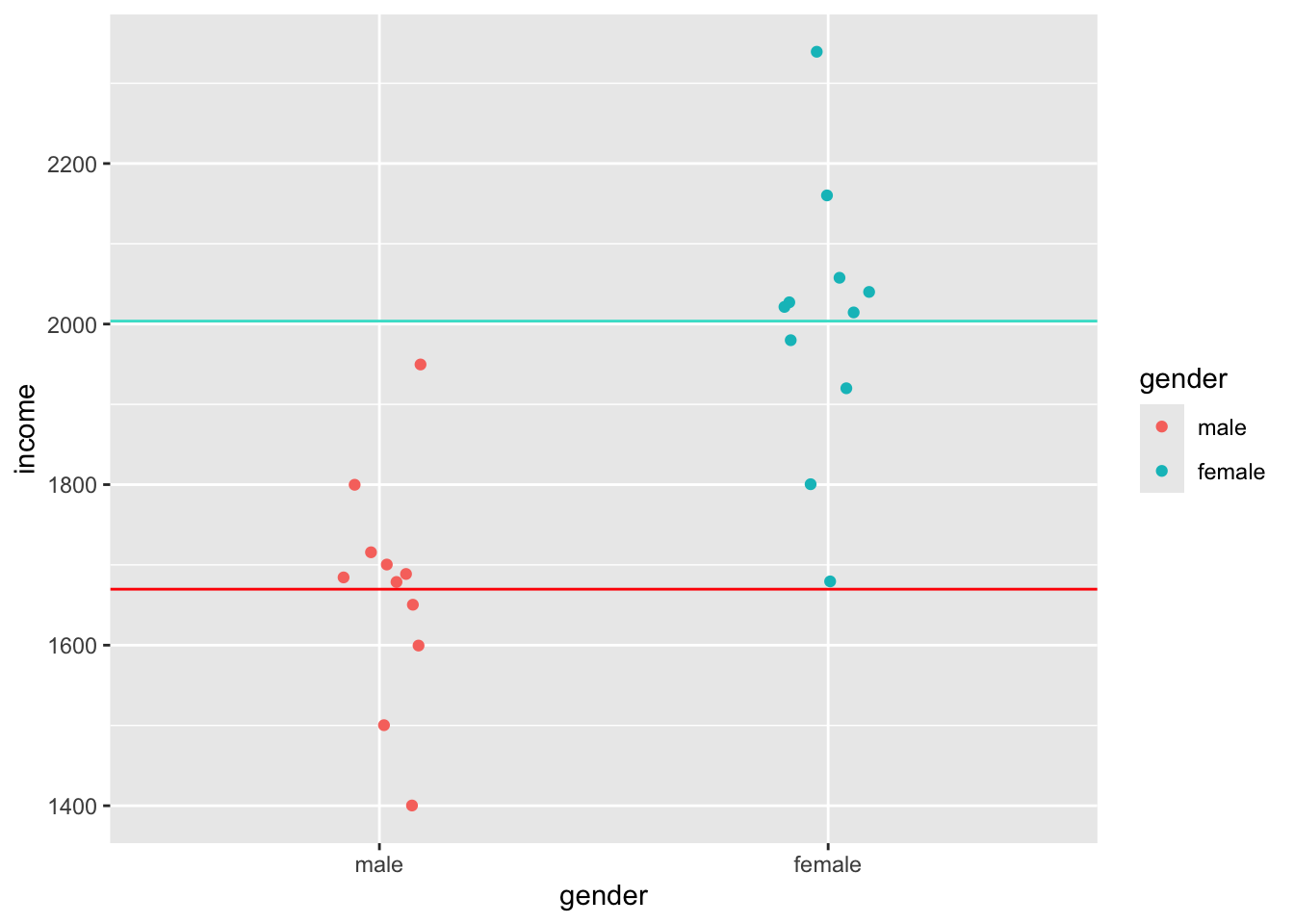

Homogeneity of variance implies that the variance of, for example, two subsets of data, is equal or close to being equal. Let’s look at how close the observed values are to the mean for the two groups identified in Figure 9.3.

data4 |>

ggplot(aes(x = gender, y = income, col = gender)) +

geom_jitter(width = 0.1) +

geom_hline(yintercept = group_means$mean[1], color = "red") +

geom_hline(yintercept = group_means$mean[2], color = "turquoise")

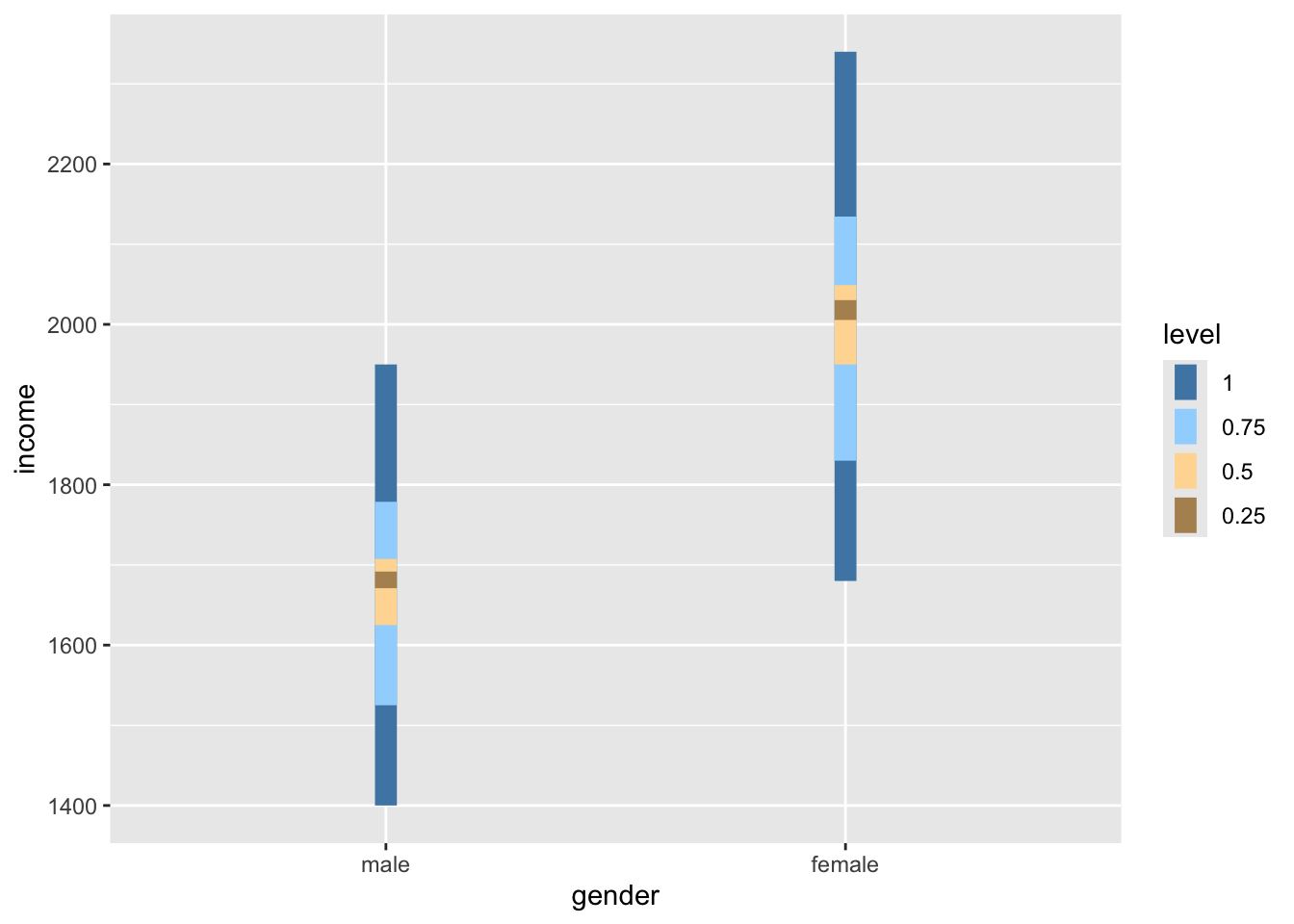

Judging by eye, we could argue that most values lie around the mean for each respective group. However, some observations are a bit further off. Still, using this visualisation, it is tough to judge whether the spread is about the same. However, boxplots can help with this, or even better, a boxplot reflected by a bar. The package ggdist has an excellent plotting function called stat_interval(), which allows us to show a boxplot in the form of a bar.

data4 |>

ggplot(aes(x = gender, y = income, group = gender)) +

ggdist::stat_interval(aes(y = income),

.width = c(0.25, 0.5, 0.75, 1)) +

# Let's add some nice complementary colours

scale_color_manual(values = c("#4D87B3", "#A1D6FF",

"#FFDAA1", "#B3915F"))

If we compare the bars, we can tell that the variance in both groups looks very similar, i.e. the length of the bars appear to be about the same height. Furthermore, if we compare the IQR for both groups, we find that they are quite close to each other.

data4 |>

group_by(gender) |>

summarise(iqr = IQR(income))# A tibble: 2 × 2

gender iqr

<fct> <dbl>

1 male 82.5

2 female 99 However, to test whether the variance between these two groups is truly similar or different, we have to perform a Levene’s test. The Levene’s test follows a similar logic as the Shapiro-Wilk test. If the test is significant, i.e. \(p < 0.05\), we have to assume that the variances between these groups are significantly different from each other. However, if the test is not significant, then the variances are similar, and we can proceed with a parametric test - assuming other assumptions are not violated. The interpretation of this test follows the one for the Shapiro-Wilk test. To compute the Levene’s test we can use the function leveneTest() from the car package. However, instead of using , to separate the two variables, this function expects a formula, which is denotated by a ~. We will cover formulas in the coming chapters.

car::leveneTest(gep$age ~ gep$gender)Levene's Test for Homogeneity of Variance (center = median)

Df F value Pr(>F)

group 2 0.8834 0.4144

297 The Levene’s test shows that our variances are similar and not different from each other because \(p > 0.05\). This is good news if we wanted to continue and perform a group comparison, like in Chapter 11.

9.6 Outliers and how to deal with them

In Chapter 8, I referred to outliers many times but never eluded to the aspects of handling them. Dealing with outliers is similar to dealing with missing data. However, it is not quite as straightforward as one might think.

In a first step, we need to determine which values count as an outlier. Aguinis, Gottfredson, and Joo (2013) reviewed 232 journal articles and found that scholars had defined outliers in 14 different ways, used 39 different techniques to detect them and applied 20 different strategies to handle them. It would be impossible to work through all these options in this book. However, I want to offer two options that have been frequently considered in publications in the field of Social Sciences:

The standard deviation (SD), and

The inter-quartile range (IQR).

9.6.1 Detecting outliers using the standard deviation

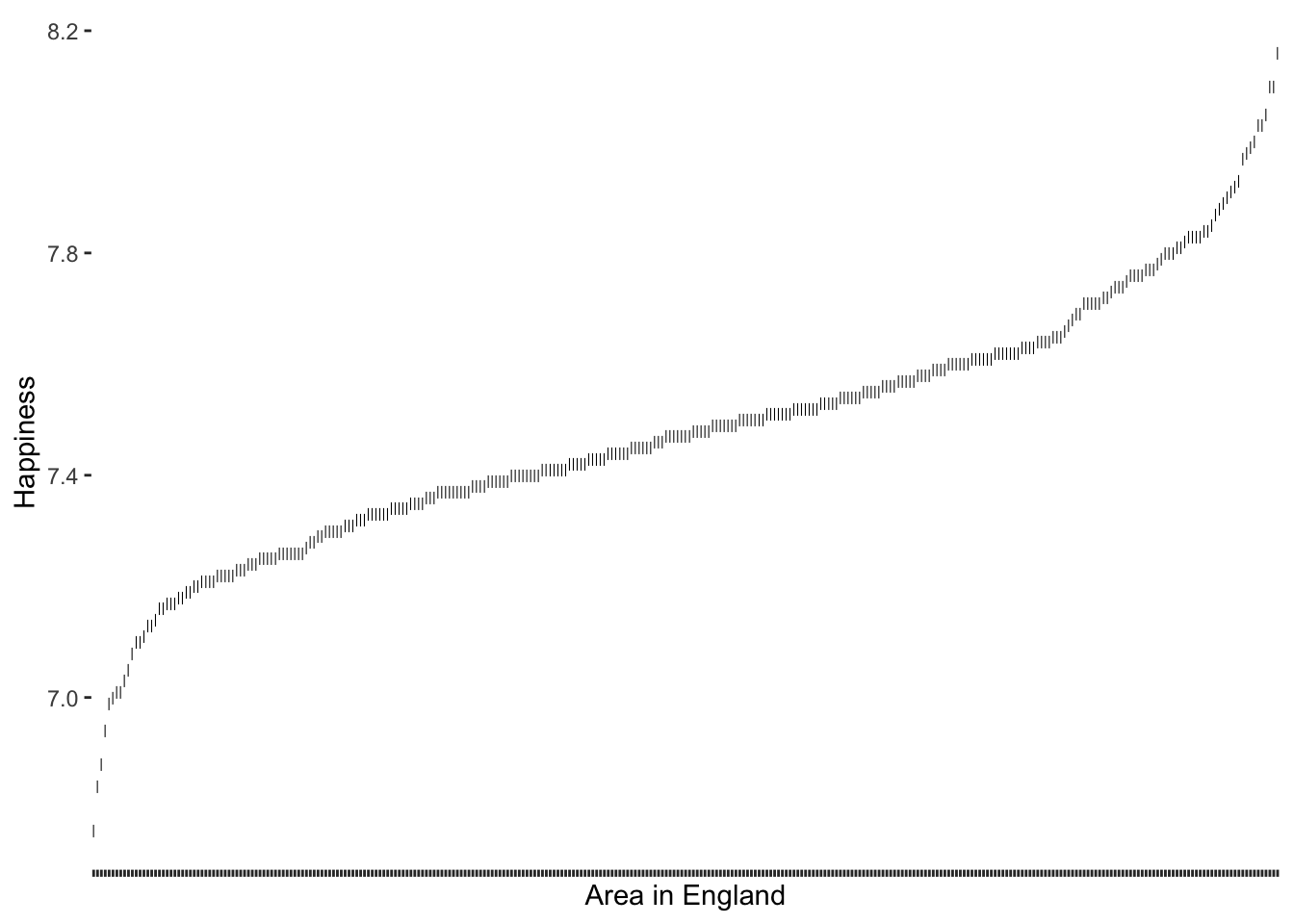

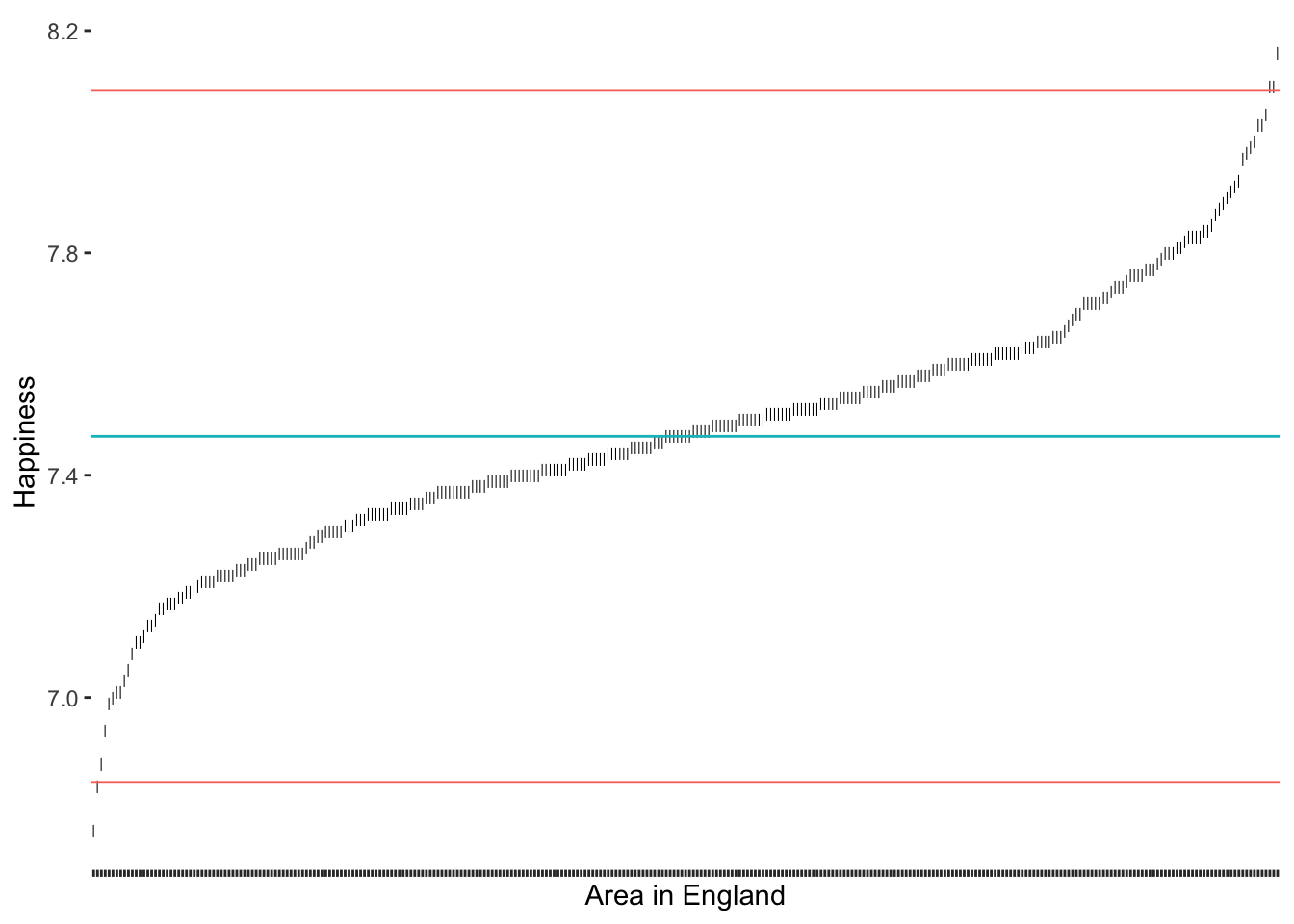

Let us assume we are interested in understanding whether there are certain areas in England that score particularly high/low on happiness and whether the majority of areas report levels around the mean. This is a deviation analysis similar to our previous plot regarding alcohol consumption (see Figure 8.10). We can simply adapt it to our new research aim. By using the hie_2021 we can first recreate the same plot. As before, I will create a base plot outlier_plot first so that we do not have to repeat the same code over and over again. We then use outlier_plot and add more layers as we see fit.

# Create the base plot

outlier_plot <-

hie_2021 |>

select(area_name, happiness) |>

ggplot(aes(x = reorder(area_name, happiness),

y = happiness)) +

geom_point(shape = 124) +

theme(axis.text.x = element_blank(),

panel.grid.major = element_blank(),

panel.background = element_blank()

) +

xlab("Area in England") +

ylab("Happiness")

outlier_plot

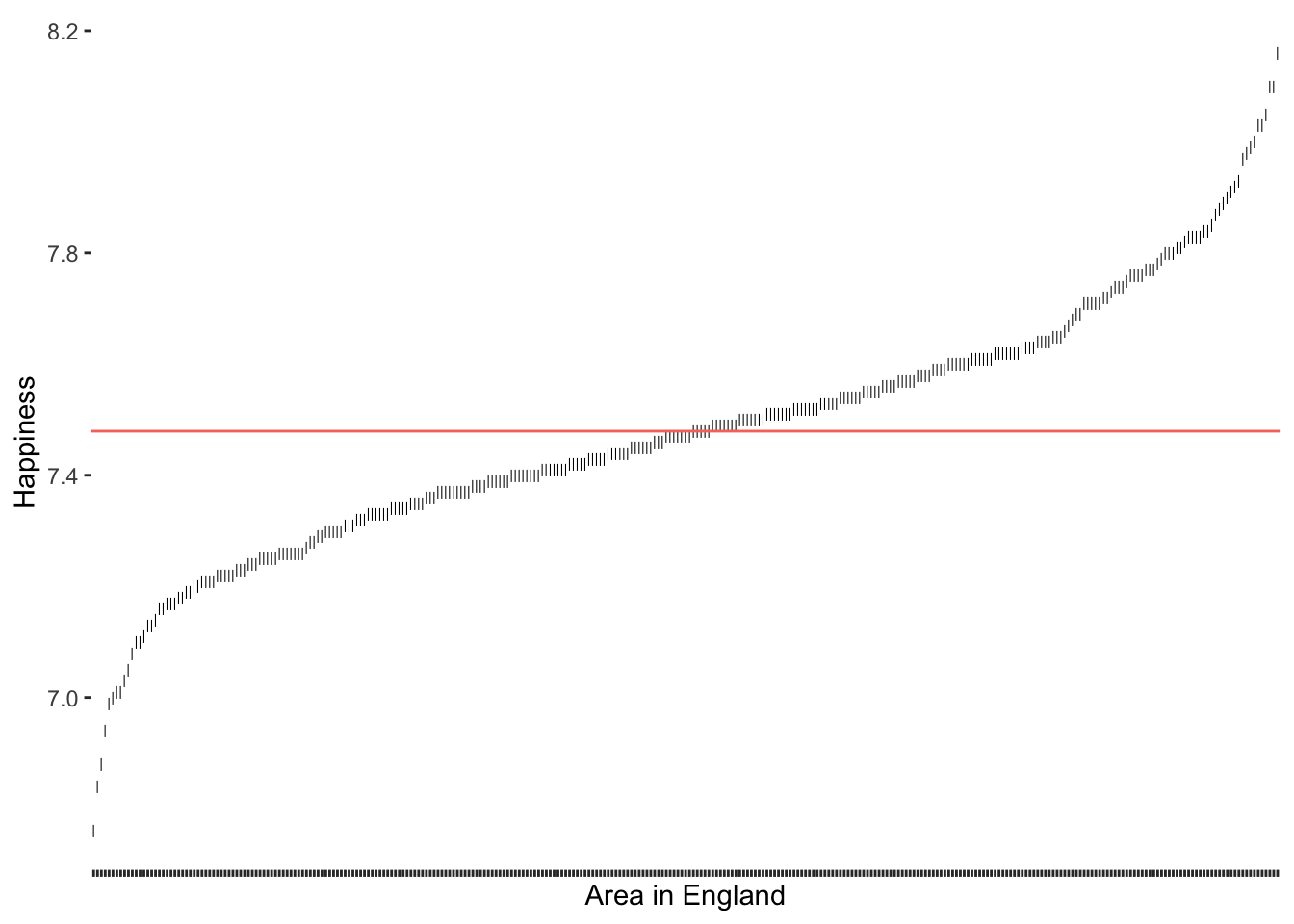

Now we can compute the mean of happiness and add it as a new layer to our existing plot.

# Compute the mean

happiness_mean <- mean(hie_2021$happiness)

# Add the mean reference line

outlier_plot +

geom_hline(aes(yintercept = happiness_mean, col = "red"),

show.legend = FALSE)

We can see that our distribution follows an S-curve and there seem to be some areas that score very high and some that score very low on happiness. Those observations at both ends of this plot which seem further away from the rest could likely be outliers. So, how can we determine which observations are truly outliers?

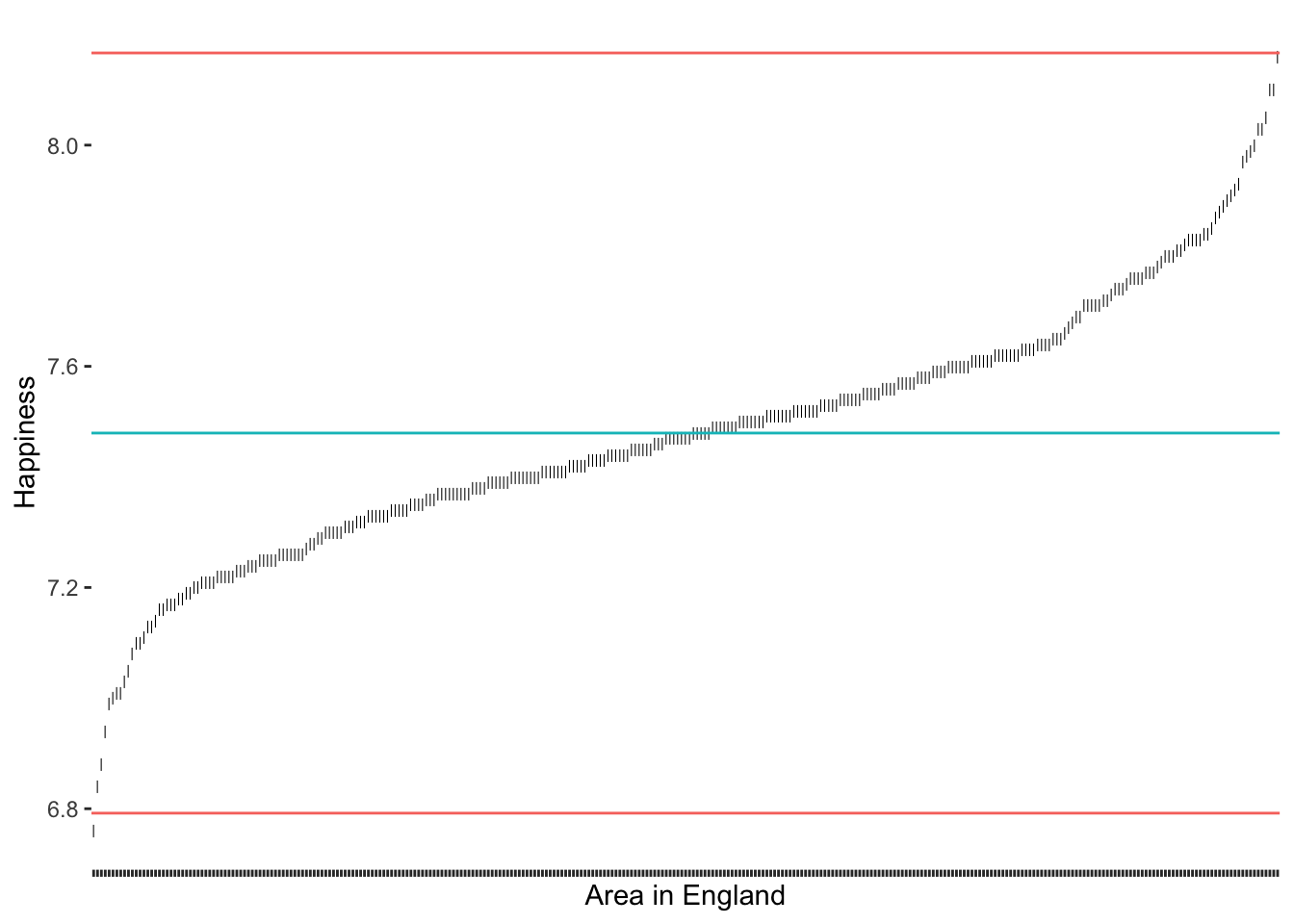

A very frequently used approach to detecting outliers is the use of the standard deviation. Usually, scholars use multiples of the standard deviation to determine thresholds. For example, a value that lies 3 standard deviations above or below the mean could be categorised as an outlier. Unfortunately, there is quite some variability regarding how many multiples of the standard deviation counts as an outlier. Some authors might use 3, and others might settle for 2 (see also Leys et al. (2013)). Let’s stick with the definition of 3 standard deviations to get us started.

# Compute the mean and the thresholds

happiness_mean <- mean(hie_2021$happiness)

sd_upper <- happiness_mean + 3 * sd(hie_2021$happiness)

sd_lower <- happiness_mean - 3 * sd(hie_2021$happiness)

# Add the thresholds and mean

outlier_plot +

geom_hline(aes(yintercept = happiness_mean, col = "red"),

show.legend = FALSE) +

geom_hline(aes(yintercept = sd_upper, col = "blue"),

show.legend = FALSE) +

geom_hline(aes(yintercept = sd_lower, col = "blue"),

show.legend = FALSE)

3 * SD as a thresholdThe results suggest that only very few outliers would be detected if we chose these thresholds, i.e. one at the very bottom that sits right under the red line. However, what would we find if we applied the stricter threshold of 2?

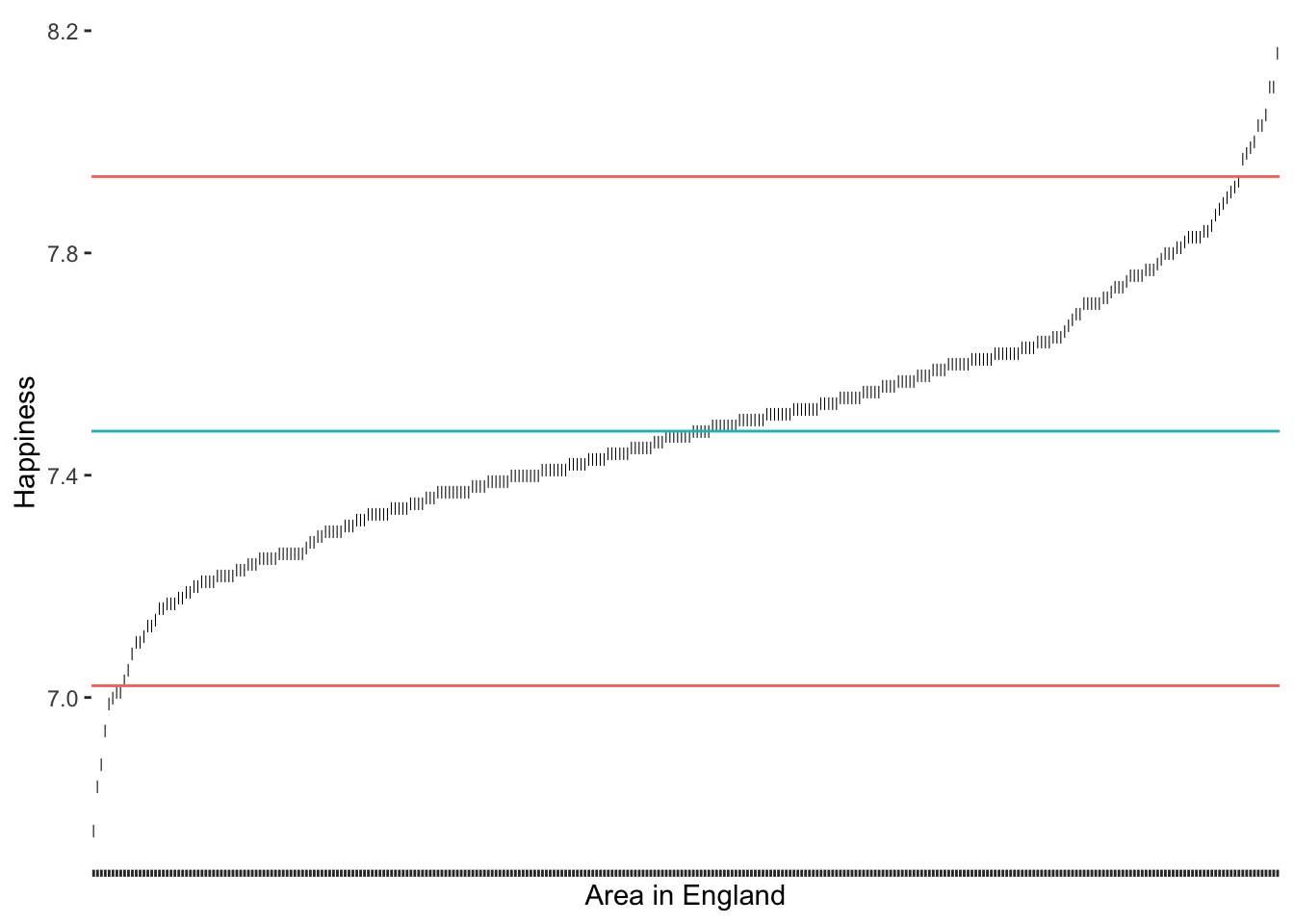

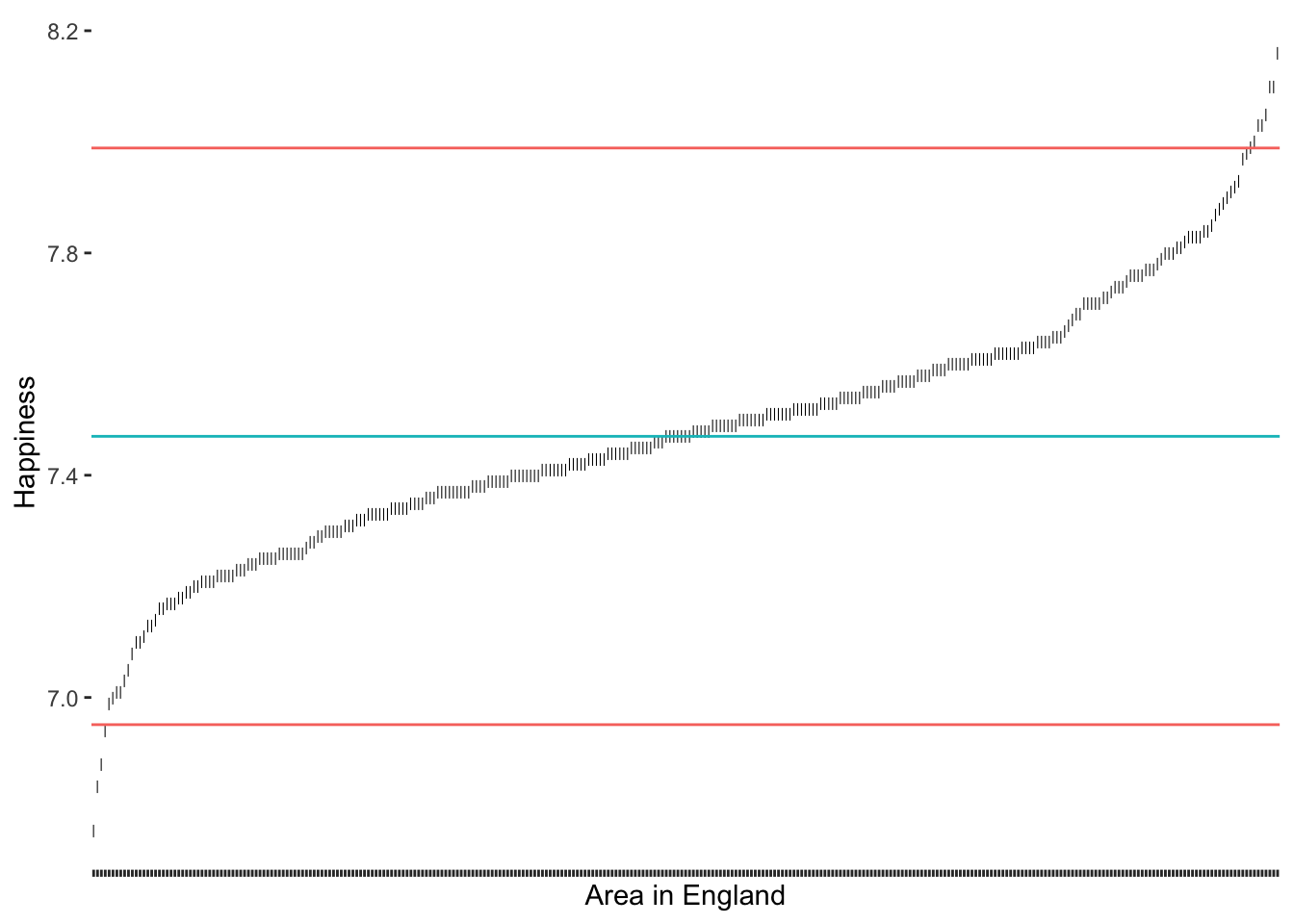

# Compute the standard deviation

sd_upper <- happiness_mean + 2 * sd(hie_2021$happiness)

sd_lower <- happiness_mean - 2 * sd(hie_2021$happiness)

# Create our plot

outlier_plot +

geom_hline(aes(yintercept = happiness_mean, col = "red"),

show.legend = FALSE) +

geom_hline(aes(yintercept = sd_upper, col = "blue"),

show.legend = FALSE) +

geom_hline(aes(yintercept = sd_lower, col = "blue"),

show.legend = FALSE)

2 * SD as a thresholdAs we would expect, we identify some more areas in England as being outliers regarding their happiness. It certainly feels somewhat arbitrary to choose a threshold of our liking. Despite its popularity, there are additional problems with this approach:

outliers affect our mean and standard deviation too,

since we use the mean, we assume that our data is normally distributed, and

in smaller samples, this approach might result in not identifying outliers at all (despite their presence) (Leys et al. 2013) (p. 764).

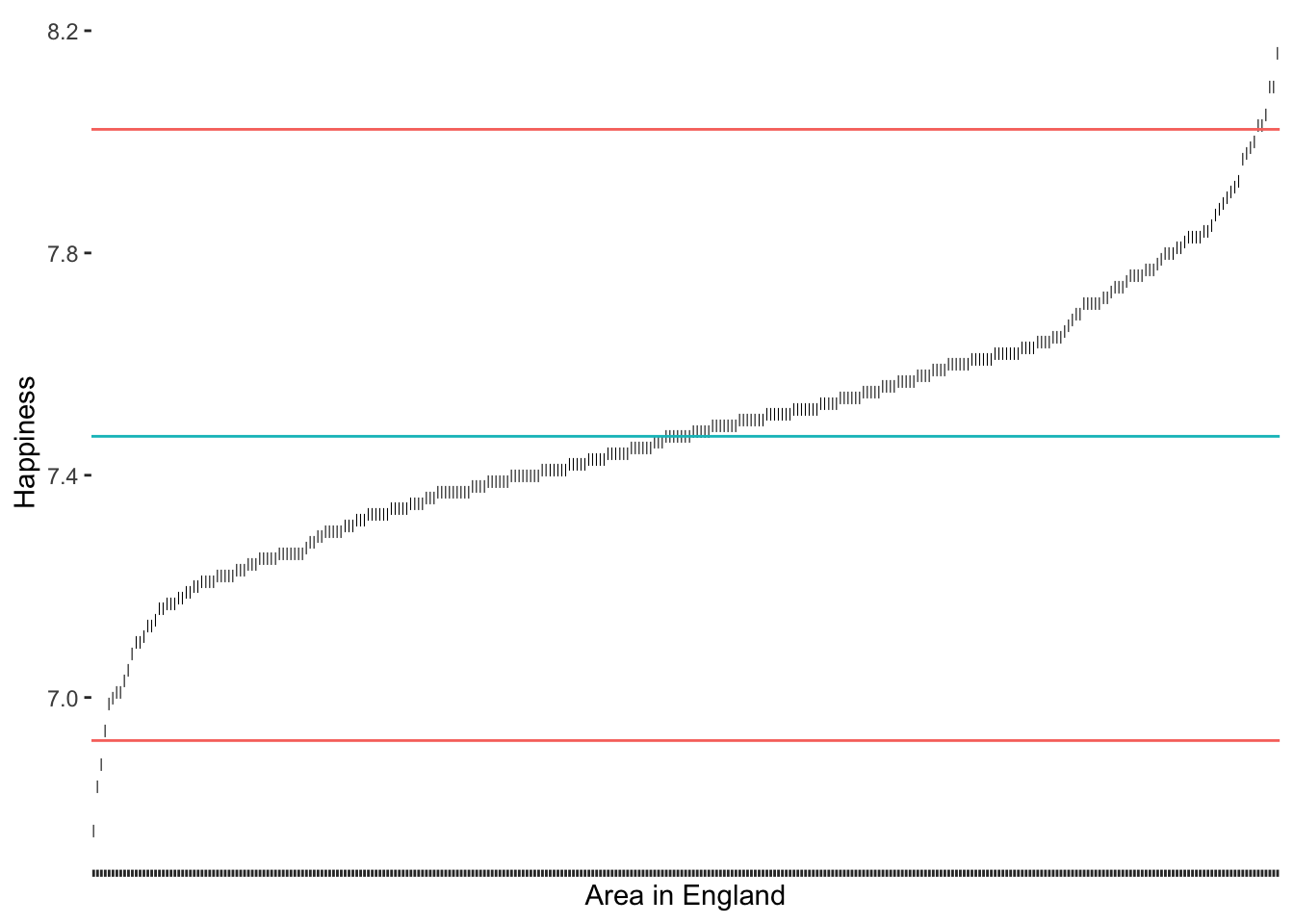

Leys et al. (2013) propose an alternative approach because medians are much less vulnerable to outliers than the mean. Similarly to the standard deviation, it is possible to calculate thresholds using the median absolute deviation (MAD). Best of all, the function mad() in R does this automatically for us. Leys et al. (2013) suggest using 2.5 times the MAD as a threshold. However, if we want to compare how well this option performs against the standard deviation, we could use 3 as well.

# Compute the median and thresholds

happiness_median <- median(hie_2021$happiness)

mad_upper <- happiness_median + 3 * mad(hie_2021$happiness)

mad_lower <- happiness_median - 3 * mad(hie_2021$happiness)

# Create our plot

outlier_plot +

geom_hline(aes(yintercept = happiness_median, col = "red"),

show.legend = FALSE) +

geom_hline(aes(yintercept = mad_upper, col = "blue"),

show.legend = FALSE) +

geom_hline(aes(yintercept = mad_lower, col = "blue"),

show.legend = FALSE)

3 * MAD as a threshold

Compared to our previous results, we notice that the median approach was much better in detecting outliers at the upper range of happiness. Because the median is not affected so much by the ‘extraordinarily happy areas of England’. Thus, the results have improved and seem more balanced. Still, one could argue that there are at least two more observations at the lower range of happiness that seem a little further away from the rest of the points on this S-curve. If we chose the criterion of 2.5 * MAD, we would also capture these outliers (see Figure 9.8).

2.5 * MAD as a threshold

Which approach to choose very much depends on the nature of your data. For example, one consideration could be that if the median and the mean of a variable are dissimilar, choosing the median might be the better option. However, we should not forget that the outliers we need to identify will affect the mean. Hence, it appears that in many cases, the median could be the better choice. Luckily, there is yet another highly popular method based on two quartiles rather than one (i.e. the median only).

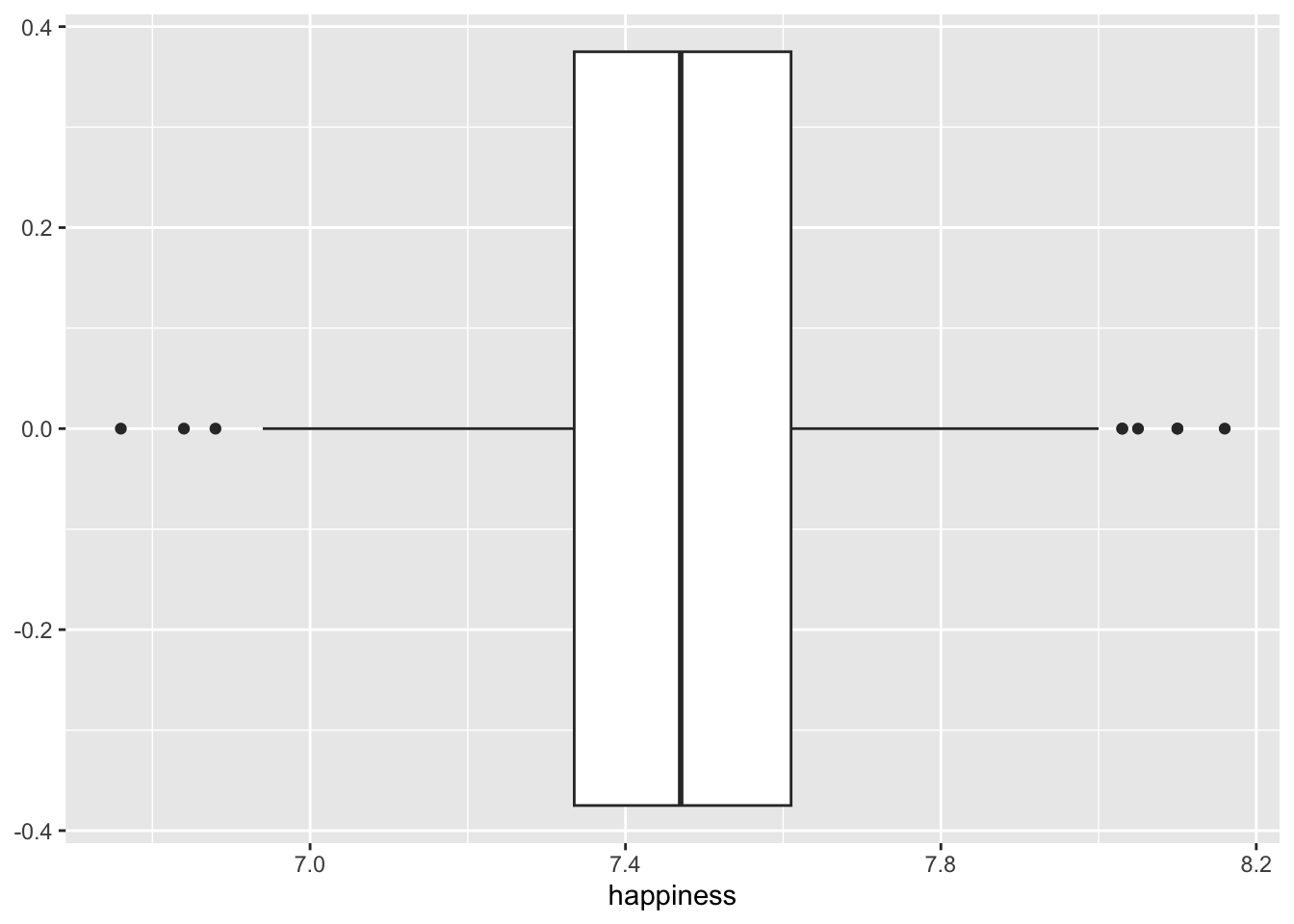

9.6.2 Detecting outliers using the interquartile range (IQR)

Another approach to classifying outliers is the use of the interquartile range (IQR). The IQR is used in boxplots and creates the dots at its ends to indicate any outliers. This approach is straightforward to implement because the computation of the IQR is simple:

\(IQR = Q_{3}-Q_{1}\)

Therefore, we can create new thresholds for the detection of outliers. For the IQR, it is common to use \(\pm 1.5 * IQR\) as the lower and upper thresholds measured from \(Q_1\) and \(Q_3\) respectively. To compute the quartiles we can use the function quantile(), which returns \(Minimum, Q_1, Q_2, Q_3, and\ Q_4\)

# Compute the quartiles

(happiness_quantiles <- quantile(hie_2021$happiness)) 0% 25% 50% 75% 100%

6.760 7.335 7.470 7.610 8.160 # Compute the thresholds

iqr_upper <- happiness_quantiles[4] + 1.5 * IQR(hie_2021$happiness)

iqr_lower <- happiness_quantiles[2] - 1.5 * IQR(hie_2021$happiness)

# Create our plot

outlier_plot +

geom_hline(aes(yintercept = happiness_median, col = "red"),

show.legend = FALSE) +

geom_hline(aes(yintercept = iqr_upper, col = "blue"),

show.legend = FALSE) +

geom_hline(aes(yintercept = iqr_lower, col = "blue"),

show.legend = FALSE)

1.5 * IQR as a threshold

As we can tell, the IQR method detects about the same outliers for our data as the median absolute deviation (MAD) approach. The outliers we find here are the same as shown in Figure 9.10 below.

hie_2021 |>

ggplot(aes(x = happiness)) +

geom_boxplot()

For our data, I would argue that the IQR and MAD method by Leys et al. (2013) produced the ‘best’ selection of outliers. However, we have to acknowledge that these classifications will always be subjective because how we position the thresholds depends on the researcher’s choice. Still, the computation is standardised, and we can plausibly explain the process of identifying outliers, we just have to make it explicit to the audience of our research.

9.6.3 Removing or replacing outliers

Now that we have identified our outliers, we are confronted with the question of what we should do with them. Like missing data (see Section 7.7), we can either remove them or replace them with other values. While removal is a relatively simple task, replacing it with other ‘reasonable’ values implies finding techniques to create such values. The same methods we used for missing data, especially multiple imputation (see Cousineau and Chartier 2010), can be used for replacing outliers. Of course, changing our dataset implies we are changing our results, so it is good practice to consider your analysis with and without outliers (e.g. Cohen, West, and Aiken (2014)). Consequently, running your analysis with two datasets can be a meaningful way to understand how much outliers affect your outcomes. Simply removing data without knowing how this might impact one’s results would be a poor decision. We should not forget that, especially with human participants, outliers can be genuine observations and should not simply be marginalised. If we know that our outliers are mistakes or respondents have intentionally provided wrong ratings in our dataset, we can certainly remove them. However, this is not always evident when detecting outliers. Thus, I recommend proceeding with caution when removing outliers. Besides, there are many alternatives to analysing your data, which are less biased by outliers, such as analytical tools that rely on the median instead of the mean. In this book, we primarily focus on conducting analysis without outliers. However, performing the analysis twice (with and without outliers) would be meaningful for your own research projects.

Irrespective of whether we remove or replace outliers, we somehow need to single them out of the crowd. Since the IQR strategy worked well for our data, we can use the thresholds we defined before, i.e. iqr_upper and iqr_lower. Therefore, an observation (i.e. an area in England) is considered an outlier if

its value lies above

iqr_upper, orits value lies below

iqr_lower

It becomes clear that we somehow need to define a condition because if it is an outlier, it should be labelled as one, but if not, then obviously we need to label the observation differently. Ideally, we want a new column in our dataset which indicates whether an area in England is an outlier (i.e. outlier == TRUE) or not (outlier == FALSE). R offers a way for us to express such conditions with the function ifelse(). It has the following structure:

ifelse(condition, TRUE, FALSE)

Let’s formulate a sentence that describes our scenario as an ifelse() function:

If an area’s

happinessis higher thaniqr_upper, orif an area’s

happinessis lower thaniqr_lower,classify this area as an outlier (i.e.

TRUE),otherwise, classify this area as not being an outlier (i.e.

FALSE).

We already know from Section 5.1 how to use logical and arithmetic operators. All we have to do is put them together in one function call and create a new column with mutate().

hie_2021_outliers <-

hie_2021 |>

mutate(outlier = ifelse(happiness > iqr_upper |

happiness < iqr_lower,

TRUE, FALSE))Since we have now a classification, we can more thoroughly filter for and inspect our outliers, i.e. we can see which areas in England are the ones that are lying outside our defined norm. We can arrange() our outliers by happiness to give the output even more meaning and improve readability

hie_2021_outliers |>

filter(outlier == "TRUE") |>

select(area_name, happiness, outlier) |>

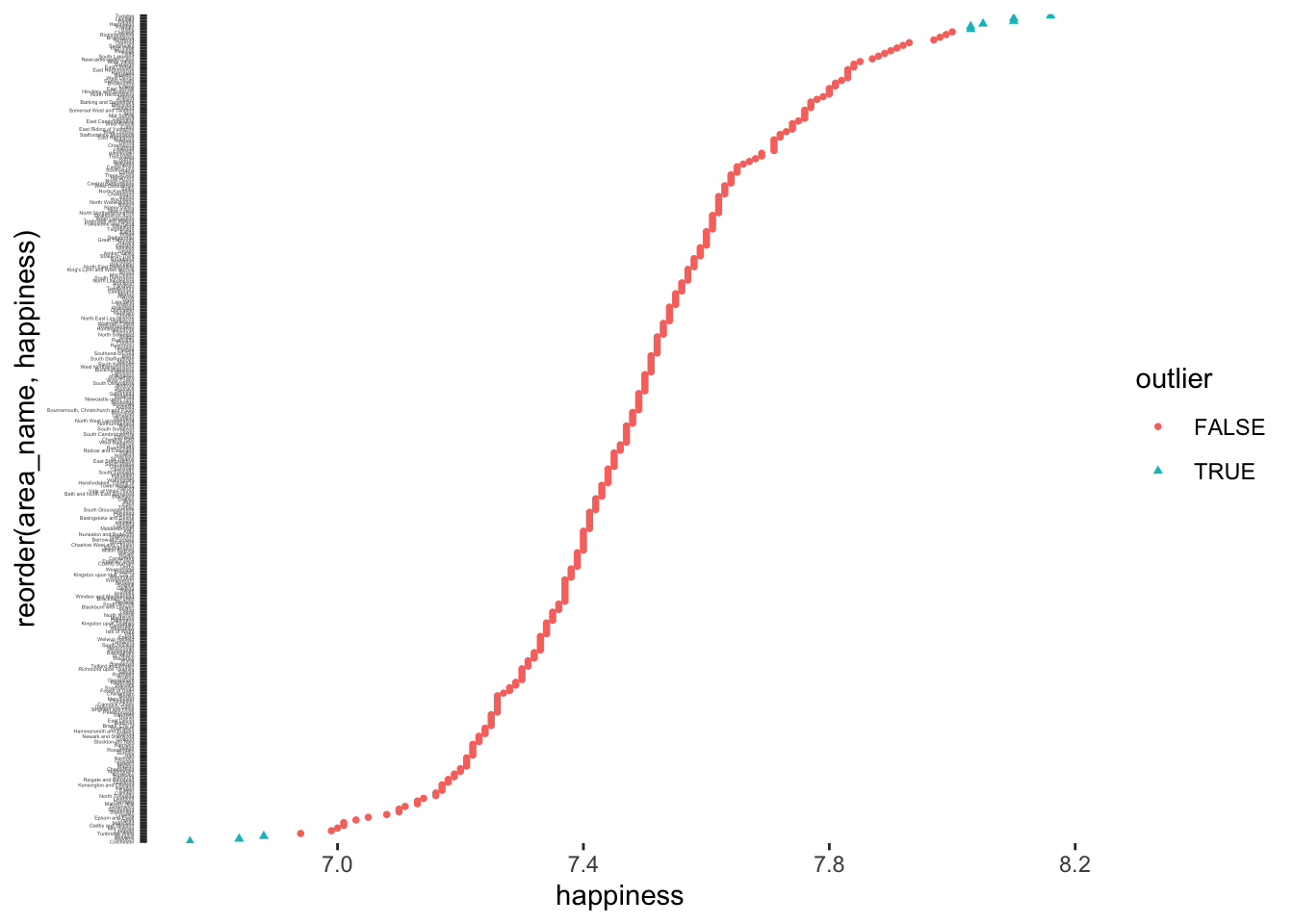

arrange(happiness)# A tibble: 9 × 3

area_name happiness outlier

<fct> <dbl> <lgl>

1 Colchester 6.76 TRUE

2 Redditch 6.84 TRUE

3 Norwich 6.88 TRUE

4 Blaby 8.03 TRUE

5 Ryedale 8.03 TRUE

6 Hambleton 8.05 TRUE

7 Pendle 8.1 TRUE

8 Lichfield 8.1 TRUE

9 Torridge 8.16 TRUE Given that the median of happiness for this dataset is 7.47, we can postulate that Colchester, Redditch and Norwich, are places in England that score considerably below the majority of the rest. However, places like Torridge, Lichfield and Pendle, can be considered particularly happy places in England. This list of areas in England also provides the answer to our research question at the beginning of this section about the happiest and least happiest areas in England.

From here, it is simple to remove these areas in England (i.e. keep the areas that are not outliers) or set their values to NA. If we replace() the values with NA, we can continue with one of the techniques demonstrated for missing values in Section 7.7. Both approaches are shown in the following code chunk.

# Keep all observations but outliers

hie_2021_outliers |>

filter(outlier == "FALSE") |>

select(area_name, outlier)# Replace values with NA

hie_2021_outliers |>

mutate(happiness = replace(happiness, outlier == "TRUE", NA)) |>

select(area_name, happiness)# A tibble: 307 × 2

area_name happiness

<fct> <dbl>

1 Hartlepool 7.54

2 Middlesbrough 7.41

3 Redcar and Cleveland 7.46

4 Stockton-on-Tees 7.23

5 Darlington 7.35

6 Halton 7.36

7 Warrington 7.38

8 Blackburn with Darwen 7.36

9 Blackpool 7.43

10 Kingston upon Hull, City of 7.38

# ℹ 297 more rowsThe replace() function is very intuitive to use. It first needs to know where you want to replace a value (happiness), then what the condition for replacing is (outlier == "TRUE"), and lastly, which value should be put instead of the original one (NA).

9.6.4 Concluding remarks about outliers

As you hopefully noticed, understanding your data requires some effort, but it is important to know your data well before proceeding to any further analysis. You can experiment with different data visualisations and design them in a way that best reflects the message you want to get across. For example, because we have added a new variable that classifies outliers, we can do more with our data visualisation than before and highlight them more clearly.

hie_2021_outliers |>

ggplot(aes(x = reorder(area_name, happiness),

y = happiness,

col = outlier,

shape = outlier)

) +

geom_point(size = 1) +

theme(panel.background = element_blank(),

axis.text.y = element_text(size = 2)) +

coord_flip()

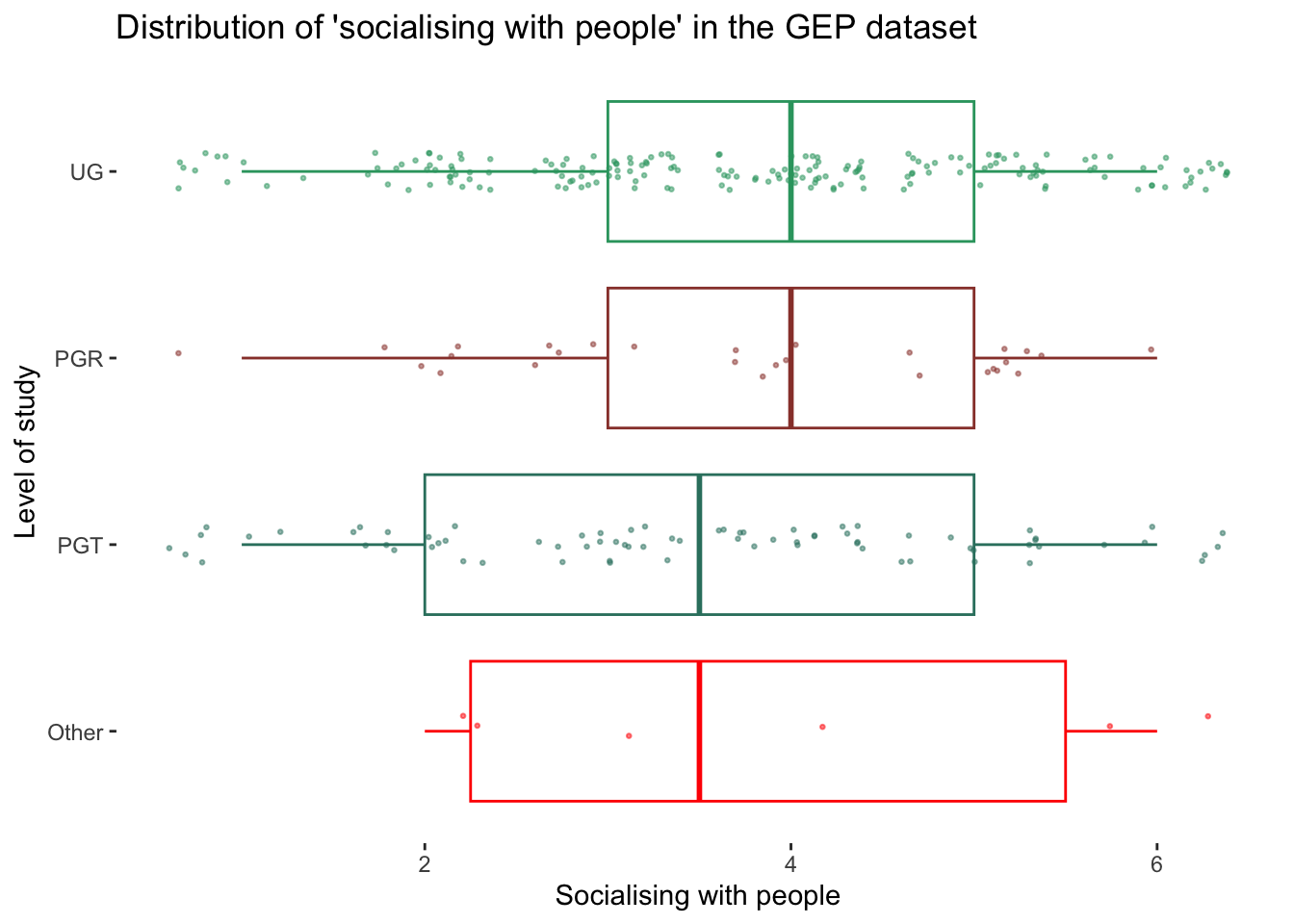

happiness showing outlierst as blue trianglesTo round things off, let me share with you one more visualisation, which demonstrates that outliers might also have to be defined based on specific groupings of your data. For example, if we look at the gep dataset that we used before, we could compare distributions based on level_of_study e.g. UG, PGT, PGR or Other. Thus, it might be essential to consider outliers in light of sub-samples of your data rather than the entire dataset, especially when comparing groups (see Chapter 11).

# The wesanderson package can make your plots look more

# 'Wes Anderson'

colour_pal <-

wesanderson::wes_palette("Darjeeling1",

11,

type = "continuous")

gep |>

ggplot(aes(x = reorder(level_of_study,

si_socialise_with_people_exp,

FUN = median),

y = si_socialise_with_people_exp,

col = level_of_study)

) +

geom_boxplot(alpha = 0,

show.legend = FALSE) +

geom_jitter(width = 0.1,

size = 0.5,

alpha = 0.5,

show.legend = FALSE

) +

scale_color_manual(values = colour_pal) +

coord_flip() +

theme(panel.background = element_blank()) +

xlab("Level of study") +

ylab("Socialising with people") +

ggtitle("Distribution of 'socialising with people' (GEP dataset)")

9.7 Exercises

We have covered quite some ground regarding different sources of bias in this section of the book. Start the exercises below to reinforce what you have learned in this chapter. Make sure you have the r4np package installed.

# Option 1:

learnr::run_tutorial("ex_sources_of_bias", package = "r4np")